A Beginner's Guide to Microsoft Azure Data Factory (ADF)

Written by Hafsa Mustafa

Technical Content Writer

October 25, 2022

It goes without saying that in today’s world, data is one of the most valuable assets a business can have. However, it is of little use when organizations fail to gather data scattered across disparate locations onto a unified platform that can act as a single source of truth for everyone who depends on it. This raises a question: how do you break down the silos and integrate your data? One answer is Azure Data Factory (ADF). Dive into this blog to understand what ADF is and how it can benefit your endeavors.

What is Azure Data Factory?

Azure Data Factory is a cloud-based data integration service. It works as both an Extract-Transform-Load (ETL) and an Extract-Load-Transform (ELT) tool, and it orchestrates pipelines to make data ingestion, transformation, and migration easier.

Azure Data Factory is serverless and fully managed. It is instrumental in building data-driven workflows because it automates and orchestrates the movement of data between cloud and on-premises systems. Much of its popularity comes from how easily it lets teams build useful, scalable data pipelines.

Why Azure Data Factory?

Enterprise data often comes in diverse forms and formats. It can be relational or non-relational, and it can take the form of video files, documents, or audio files. It is almost impossible to harness the power of such raw data unless you organize it in a defined way. Azure Data Factory does just that.

This platform lets you ingest data, transform it, and move it between different data stores so that data science, analytics, and BI teams can generate actionable insights from it. Big data in particular needs an orchestration framework to realize its full potential. Azure Data Factory can help here because it:

- Supports data migrations between traditional and cloud-based systems

- Carries out data integration processes

- Enables you to create data flows without writing code

- Takes care of job scheduling and orchestration

- Has built-in features for monitoring and alerting

- Provides consumption-based pricing

- Is highly scalable

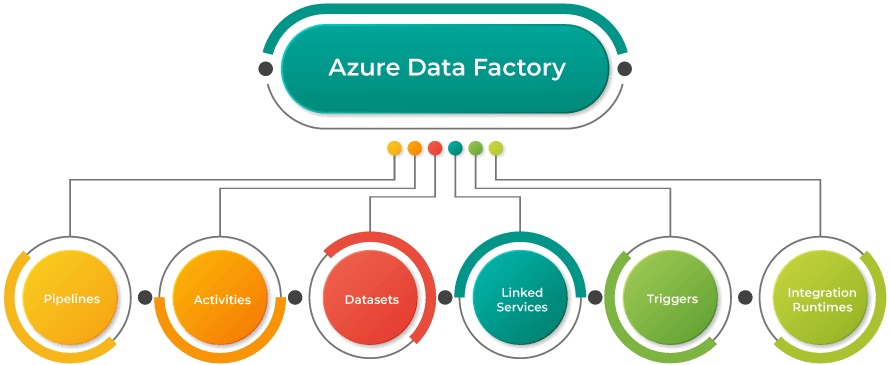

Components of Azure Data Factory

It is essential to know the major components of Azure Data Factory in order to understand how this platform works. The key components are:

Pipelines

A pipeline is a logical grouping of activities that together perform a task. Activities can be chained so that they run one after another, with the output of one step feeding the next, or they can run in parallel when they do not depend on each other. Each pipeline in ADF can contain one or more activities.
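
Here is a minimal sketch, assuming the JSON shape ADF uses for pipeline definitions, of a two-step pipeline in which a stored procedure runs only after a copy activity succeeds. All pipeline, activity, dataset, linked-service, and stored-procedure names are hypothetical, and the `execution_order` helper is our own local illustration of how `dependsOn` chains steps, not how the ADF service actually schedules runs.

```python
import json

# Trimmed, illustrative pipeline definition in ADF's JSON shape.
# Every name below is a hypothetical placeholder.
pipeline = {
    "name": "IngestAndClean",
    "properties": {
        "activities": [
            {
                "name": "CopyRawFiles",
                "type": "Copy",
                "inputs": [{"referenceName": "RawCsvDataset", "type": "DatasetReference"}],
                "outputs": [{"referenceName": "StagingSqlTable", "type": "DatasetReference"}],
                "typeProperties": {
                    "source": {"type": "DelimitedTextSource"},
                    "sink": {"type": "AzureSqlSink"},
                },
            },
            {
                "name": "CleanStaging",
                "type": "SqlServerStoredProcedure",
                # Run this step only after CopyRawFiles has succeeded.
                "dependsOn": [
                    {"activity": "CopyRawFiles", "dependencyConditions": ["Succeeded"]}
                ],
                "linkedServiceName": {
                    "referenceName": "StagingSqlLinkedService",
                    "type": "LinkedServiceReference",
                },
                "typeProperties": {"storedProcedureName": "usp_CleanStaging"},
            },
        ]
    },
}


def execution_order(pipeline_def):
    """Return activity names so each appears after every activity it depends on.

    Local illustration only: it ignores dependency conditions and parallelism.
    """
    activities = pipeline_def["properties"]["activities"]
    deps = {a["name"]: [d["activity"] for d in a.get("dependsOn", [])] for a in activities}
    ordered, done = [], set()
    while len(ordered) < len(deps):
        # Activities whose dependencies have all been placed already.
        ready = [n for n, ds in deps.items() if n not in done and all(d in done for d in ds)]
        if not ready:
            raise ValueError("circular or missing activity dependency")
        ordered.extend(ready)
        done.update(ready)
    return ordered


if __name__ == "__main__":
    print(json.dumps(pipeline, indent=2))
    print(execution_order(pipeline))
```

Because `CleanStaging` declares a `dependsOn` entry with the `Succeeded` condition, it waits for `CopyRawFiles`; an activity with no `dependsOn` list would be free to start right away.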

Activities

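An activity is a single step in a pipeline. Azure Data Factory supports three kinds of activities, which together determine what you can use it for: data movement activities (such as Copy), data transformation activities (such as data flows or stored procedures), and control activities (such as ForEach or If Condition) that manage the flow of the pipeline.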
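
As a rough illustration, the three categories can be expressed as a lookup from an activity's `type` string to its category. The map below is a partial, hand-picked subset of ADF activity types, and `categorize` is a hypothetical helper written for this post, not part of any ADF SDK.

```python
# Partial, hand-picked map from ADF activity "type" strings to the three
# activity categories. Not exhaustive; unknown types fall into "unknown".
ACTIVITY_CATEGORIES = {
    "Copy": "data movement",
    "ExecuteDataFlow": "data transformation",
    "SqlServerStoredProcedure": "data transformation",
    "DatabricksNotebook": "data transformation",
    "HDInsightHive": "data transformation",
    "ForEach": "control",
    "IfCondition": "control",
    "Until": "control",
    "Wait": "control",
    "ExecutePipeline": "control",
    "Lookup": "control",
    "SetVariable": "control",
}


def categorize(activities):
    """Group activity names from a pipeline's activity list by category."""
    groups = {}
    for activity in activities:
        category = ACTIVITY_CATEGORIES.get(activity["type"], "unknown")
        groups.setdefault(category, []).append(activity["name"])
    return groups


if __name__ == "__main__":
    sample = [
        {"name": "CheckForNewFiles", "type": "IfCondition"},
        {"name": "CopyNewFiles", "type": "Copy"},
        {"name": "ShapeData", "type": "ExecuteDataFlow"},
    ]
    print(categorize(sample))
```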

Datasets

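A dataset represents the structure of the data you want to use as the input or output of an activity, such as a table or a file. It holds the parameters that locate that data within a data store (for example, a table name or a file path) and references the linked service that connects to the store. One linked service can be shared by one or many datasets.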
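
The sketch below assumes ADF's JSON format for a `DelimitedText` (CSV) dataset stored in Azure Blob Storage. The helper `delimited_text_dataset` and all the names passed to it are hypothetical; the point is that the dataset records where the data sits and how it is laid out, while the connection details come from the linked service it references.

```python
import json


def delimited_text_dataset(name, linked_service, container, folder, file_name):
    """Build a DelimitedText (CSV) dataset definition in ADF's JSON shape.

    The dataset only says where the data lives and how it is laid out;
    the connection itself comes from the referenced linked service.
    """
    return {
        "name": name,
        "properties": {
            "type": "DelimitedText",
            "linkedServiceName": {
                "referenceName": linked_service,
                "type": "LinkedServiceReference",
            },
            "typeProperties": {
                "location": {
                    "type": "AzureBlobStorageLocation",
                    "container": container,
                    "folderPath": folder,
                    "fileName": file_name,
                },
                "columnDelimiter": ",",
                "firstRowAsHeader": True,
            },
        },
    }


if __name__ == "__main__":
    # Two datasets sharing one hypothetical linked service.
    orders = delimited_text_dataset("OrdersCsv", "SalesBlobStorage", "raw", "orders", "orders.csv")
    customers = delimited_text_dataset("CustomersCsv", "SalesBlobStorage", "raw", "customers", "customers.csv")
    print(json.dumps([orders, customers], indent=2))
```

Both datasets in the example reuse the same `SalesBlobStorage` linked service, which is the one-to-many relationship between linked services and datasets.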

Linked services

Linked services hold the information needed to connect to data sources, much like connection strings. The configuration parameters for a specific data source are stored in its linked service. The data source can be an on-premises SQL Server database, an Azure SQL Data Warehouse, an Azure Blob Storage container, or something else.
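
For illustration, here is a hedged sketch of an Azure Blob Storage linked service in ADF's JSON format, built with a hypothetical helper function. The account name and key are placeholders; in practice you would avoid embedding real keys in a definition, for example by referencing a secret stored in Azure Key Vault.

```python
import json


def blob_storage_linked_service(name, account_name, account_key):
    """Build an Azure Blob Storage linked service definition in ADF's JSON shape."""
    connection_string = (
        "DefaultEndpointsProtocol=https;"
        f"AccountName={account_name};AccountKey={account_key}"
    )
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {"connectionString": connection_string},
        },
    }


if __name__ == "__main__":
    # Placeholder values only; keep real account keys out of source code.
    ls = blob_storage_linked_service("SalesBlobStorage", "<account-name>", "<account-key>")
    print(json.dumps(ls, indent=2))
```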

Triggers

Triggers determine when a pipeline run starts. A schedule trigger, for example, holds the start and end dates and the execution frequency of the pipeline, while other trigger types fire in response to events, such as a file landing in a storage account. Triggers matter because they let pipelines run on their own, without anyone starting each run manually.
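
A minimal sketch, assuming ADF's JSON format for a schedule trigger, showing where the start date, end date, and frequency live and how the trigger points at a pipeline. The helper, trigger name, pipeline name, and dates are hypothetical.

```python
import json

# Recurrence units a schedule trigger accepts.
FREQUENCIES = {"Minute", "Hour", "Day", "Week", "Month"}


def schedule_trigger(name, pipeline_name, start_time, end_time, frequency, interval):
    """Build a schedule trigger definition in ADF's JSON shape."""
    if frequency not in FREQUENCIES:
        raise ValueError(f"unsupported frequency: {frequency}")
    if interval < 1:
        raise ValueError("interval must be a positive integer")
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": frequency,
                    "interval": interval,
                    "startTime": start_time,
                    "endTime": end_time,
                    "timeZone": "UTC",
                }
            },
            # The pipeline(s) this trigger starts.
            "pipelines": [
                {
                    "pipelineReference": {
                        "type": "PipelineReference",
                        "referenceName": pipeline_name,
                    }
                }
            ],
        },
    }


if __name__ == "__main__":
    # Run a hypothetical IngestAndClean pipeline every 6 hours during 2023.
    trigger = schedule_trigger(
        "EverySixHours", "IngestAndClean",
        "2023-01-01T00:00:00Z", "2023-12-31T23:59:59Z", "Hour", 6,
    )
    print(json.dumps(trigger, indent=2))
```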

Integration Runtimes

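An integration runtime (IR) is the compute infrastructure that ADF uses to run activities. It acts as a bridge between an activity and the linked services it works with, which is why it can be thought of as the launching pad for each run. ADF offers three types: the Azure IR, the self-hosted IR (for reaching data in private networks such as on-premises systems), and the Azure-SSIS IR (for running SQL Server Integration Services packages).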
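
As a sketch, assuming ADF's JSON formats, the snippet below defines a self-hosted integration runtime and an on-premises SQL Server linked service that routes its connection through that runtime via `connectVia`. The helper functions, names, and connection-string placeholders are hypothetical. Installing and registering the self-hosted runtime on a machine inside your network is a separate step not shown here.

```python
import json


def self_hosted_runtime(name, description=""):
    """Define a self-hosted integration runtime (its agent runs inside your network)."""
    return {"name": name, "properties": {"type": "SelfHosted", "description": description}}


def on_prem_sql_linked_service(name, connection_string, runtime_name):
    """Define a SQL Server linked service that connects through the given runtime."""
    return {
        "name": name,
        "properties": {
            "type": "SqlServer",
            "typeProperties": {"connectionString": connection_string},
            # connectVia tells ADF which integration runtime to use for this connection.
            "connectVia": {
                "referenceName": runtime_name,
                "type": "IntegrationRuntimeReference",
            },
        },
    }


if __name__ == "__main__":
    ir = self_hosted_runtime("OnPremRuntime", "Runs inside the corporate network")
    sql = on_prem_sql_linked_service(
        "LegacyErpSql",
        "Data Source=<server>;Initial Catalog=<database>;"
        "Integrated Security=False;User ID=<user>;Password=<password>;",
        ir["name"],
    )
    print(json.dumps([ir, sql], indent=2))
```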

Azure Data Factory in Comparison to Other Data Integration Tools

Here’s why Azure Data Factory fares better than other cloud-based integration services.

Code-Less Workflow

One significant benefit of Azure Data Factory is that it can be used by non-technical users. A person who does not know how to code can configure the Data Factory to ingest, transform, and load data from different sources.

Data Migration on Both Cloud and On-Premises Systems

Whether you need to move data within your multi-cloud architecture or transfer it between a legacy system and a modern cloud-based data warehouse, you can do it easily with Azure Data Factory. ADF can also keep your applications up to date with new data, for example by rerunning transfers on a schedule.

Easy Tracking

Azure Data Factory has built-in monitoring features that you can use to track the progress of your workflows. You can also create alerts so that you are notified whenever something goes wrong or a run is held up.
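
ADF's built-in monitoring and alerting are available without writing code, but the same idea can be sketched client-side: poll a pipeline run's status until it reaches a terminal state and raise an alert if it did not succeed. The `wait_for_run` helper below is hypothetical and takes a `get_status` callable so it stays SDK-agnostic. With the `azure-mgmt-datafactory` Python SDK, that callable might wrap `adf_client.pipeline_runs.get(resource_group, factory_name, run_id).status`, but treat that wiring as an assumption to check against the SDK documentation.

```python
import time

# Pipeline run states after which the status no longer changes.
TERMINAL_STATES = {"Succeeded", "Failed", "Cancelled"}


def wait_for_run(get_status, poll_seconds=30, timeout_seconds=3600, on_failure=print):
    """Poll a pipeline run until it finishes or times out, reporting problems.

    get_status: zero-argument callable returning the run's current status string.
    on_failure: called with a message when the run fails or times out
                (a stand-in for a real alert such as an email or webhook).
    Returns the last status observed.
    """
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = get_status()
        if status in TERMINAL_STATES:
            if status != "Succeeded":
                on_failure(f"Pipeline run ended with status {status}")
            return status
        if time.monotonic() >= deadline:
            on_failure(f"Pipeline run still {status} after {timeout_seconds}s")
            return status
        time.sleep(poll_seconds)


if __name__ == "__main__":
    # Simulated status sequence standing in for real API calls.
    fake_statuses = iter(["Queued", "InProgress", "InProgress", "Failed"])
    print(wait_for_run(lambda: next(fake_statuses), poll_seconds=0))
```

Passing the status lookup in as a function keeps the polling and alerting logic testable without an Azure subscription.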

Large Number of Connectors

Azure Data Factory supports a large number of pre-built connectors (more than 100) that can be utilized to migrate the data. They are easy to set up and can be put to work instantly.

Consumption-Based Pricing

With Azure Data Factory, you pay only for what you use, and you don't need to make a large upfront investment. This makes the platform cost-friendly and effective.

Conclusion

Azure Data Factory provides modern businesses with an efficient alternative to SQL Server Integration Services by simplifying data integration and orchestration. If you have any queries related to the subject, feel free to contact us. Royal Cyber data experts have years of experience working with modern data architecture and devising ingenious, tailor-made solutions for enterprises belonging to fintech, health, automotive, petroleum, telecommunication, security, and retail industries.
