Shifting from Legacy System to Modern Architecture with Azure

Written by Hafsa Mustafa

Technical Content Writer

April 13, 2023

With data volumes growing and cybercrime on the rise, many organizations are migrating to cloud data warehouses, because legacy systems tend to be slow, costly, and hard to maintain. Business growth demands innovation, which means thinking outside the box and acting quickly on data-driven strategic plans. Cloud-based systems make this possible.

A cloud data warehouse provides better access to data and improved integration. It also offers a low total cost of ownership, greater data storage capacity, and self-service capabilities, which together help a business achieve fast and steady growth.

This blog discusses how Royal Cyber’s data engineering experts enabled a client to switch to a modern data architecture and boost its productivity.

Problem Statement

A leading manufacturing company based in the United States was looking for a fast and scalable cloud-based data warehouse that could be incrementally populated and easily connected with its on-premises data sources to quickly generate insightful analytics.

The company was finding it challenging to analyze the large volume of data it generated every day (around 200 GB). The manufacturer wanted to use current and historical data from its production machines on a regular schedule, every 12 hours, to generate actionable analytics. This was proving to be a daunting task: the data was recorded at minute-level granularity, and the existing data warehouse was struggling to handle the load.

This led the company to look for a fast and scalable cloud-based data warehouse that could be incrementally populated daily and easily connected with its on-premises data sources. It also needed robust pipelines that could be properly logged and monitored. Finally, the manufacturer wanted a budget-friendly solution that would not strain its resources.

Our Solution

After thoroughly analyzing the situation, Royal Cyber suggested Azure Synapse and Azure Data Factory as the most suitable technologies for the data warehouse migration.

Our data experts chose Azure Synapse SQL because it can handle large volumes of data at high speed. Besides scalability and speed, its capacity to absorb newly arriving data and its budget-friendly pricing were also considered.

Azure Data Factory (ADF) was selected for data migration because it could easily integrate with the client’s on-premises data systems. ADF is known for supporting swift data pipeline development, and our team found pipelines easier to create in ADF than in other ETL tools. In addition, Azure Data Factory is low-cost and lets users monitor data pipelines and generate alerts if any process fails or does not deliver.

The overall plan was to shift to a cloud-based data architecture that is easily scalable, more efficient, highly flexible, and inexpensive to run.

Solution Architecture

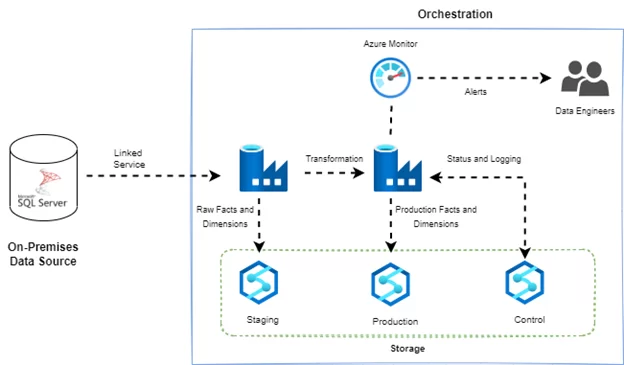

We began the orchestration process by building incremental pipelines on Azure Data Factory that could be scheduled to fetch data daily. These pipelines could be used for data movement as well as for data transformation through enrichment, joins, and other methods. The data was then gathered on Azure Synapse, which served as the cloud warehouse for storing not only the incoming data but also pipeline artifacts such as logs and run statistics.
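The incremental idea can be illustrated with a small, tool-agnostic Python sketch. It is not the client's ADF pipeline definition; it only shows the common "watermark" pattern, where each run fetches rows newer than the last timestamp already loaded. The function name `fetch_increment` and the fields `machine_id`, `recorded_at`, and `temp_c` are hypothetical and chosen for illustration.

```python
from datetime import datetime
from typing import Dict, Iterable, List, Tuple


def fetch_increment(
    rows: Iterable[Dict], last_watermark: datetime
) -> Tuple[List[Dict], datetime]:
    """Return only rows newer than last_watermark, plus the updated watermark."""
    new_rows = [r for r in rows if r["recorded_at"] > last_watermark]
    # If nothing new arrived, the watermark stays where it was.
    new_watermark = max(
        (r["recorded_at"] for r in new_rows), default=last_watermark
    )
    return new_rows, new_watermark


# Hypothetical minute-level machine readings standing in for the source system.
source = [
    {"machine_id": "M1", "recorded_at": datetime(2023, 4, 12, 23, 59), "temp_c": 71.2},
    {"machine_id": "M1", "recorded_at": datetime(2023, 4, 13, 0, 0), "temp_c": 71.5},
    {"machine_id": "M2", "recorded_at": datetime(2023, 4, 13, 0, 1), "temp_c": 64.0},
]

# The previous run loaded everything up to 23:59, so only two rows are new.
batch, watermark = fetch_increment(source, datetime(2023, 4, 12, 23, 59))
```

Running the function again with the returned watermark yields an empty batch, which is what makes a scheduled run safe to repeat.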

Arriving data first landed in its raw form in the staging database, which served as intermediate storage. It was then moved to a production database that holds production-ready data organized into facts and dimensions. Data stored there has been fully tested and validated.
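As a rough sketch of the staging-to-production step, the Python below validates raw rows and splits them into a dimension and a fact structure. The validation rule, the `promote` function, and the column names are assumptions made for illustration; the actual checks and schema used in the project are not described here.

```python
def promote(staging_rows):
    """Validate raw staging rows and split them into dimension and fact rows."""
    dim_machine = {}    # machine_id -> surrogate key (hypothetical dimension)
    fact_readings = []  # one row per valid reading (hypothetical fact table)
    rejected = []       # rows that failed validation
    for row in staging_rows:
        has_machine = row.get("machine_id") is not None
        has_value = isinstance(row.get("temp_c"), (int, float))
        if not (has_machine and has_value):
            rejected.append(row)
            continue
        # Reuse the existing surrogate key, or assign the next one.
        key = dim_machine.setdefault(row["machine_id"], len(dim_machine) + 1)
        fact_readings.append(
            {"machine_key": key, "minute": row["minute"], "temp_c": row["temp_c"]}
        )
    return dim_machine, fact_readings, rejected


staging = [
    {"machine_id": "M1", "minute": "2023-04-13T00:00", "temp_c": 71.5},
    {"machine_id": "M2", "minute": "2023-04-13T00:00", "temp_c": 64.0},
    {"machine_id": "M1", "minute": "2023-04-13T00:01", "temp_c": None},  # corrupt
    {"machine_id": "M1", "minute": "2023-04-13T00:02", "temp_c": 71.9},
]

dims, facts, bad = promote(staging)
```

Keeping rejected rows separate, rather than dropping them silently, makes it possible to count corrupt records per run.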

The control database contains information about pipeline runs and maintains their logs. It also shows the status of the pipelines, for instance, whether a specific pipeline has been executed or not. The other artifacts that this database keeps are the amount of data fetched in the latest runs and corrupt records, among other metrics.
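A control table of this kind might look like the following sketch. It uses Python's built-in `sqlite3` module as a local stand-in for the control database, so it runs anywhere; the table name `pipeline_run_log`, its columns, and the status values are hypothetical, not the project's actual schema.

```python
import sqlite3

# In-memory SQLite stands in for the control database (assumption for the demo).
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE pipeline_run_log (
        run_id        INTEGER PRIMARY KEY,
        pipeline_name TEXT    NOT NULL,
        status        TEXT    NOT NULL,
        rows_fetched  INTEGER NOT NULL,
        corrupt_rows  INTEGER NOT NULL
    )
    """
)


def log_run(conn, pipeline_name, status, rows_fetched, corrupt_rows):
    """Record one pipeline run and return its run_id."""
    cur = conn.execute(
        "INSERT INTO pipeline_run_log "
        "(pipeline_name, status, rows_fetched, corrupt_rows) VALUES (?, ?, ?, ?)",
        (pipeline_name, status, rows_fetched, corrupt_rows),
    )
    conn.commit()
    return cur.lastrowid


def latest_status(conn, pipeline_name):
    """Return the status of the most recent run, or 'NOT_RUN' if none exists."""
    row = conn.execute(
        "SELECT status FROM pipeline_run_log "
        "WHERE pipeline_name = ? ORDER BY run_id DESC LIMIT 1",
        (pipeline_name,),
    ).fetchone()
    return row[0] if row else "NOT_RUN"


log_run(conn, "load_machine_readings", "SUCCEEDED", 2880, 3)
log_run(conn, "load_machine_readings", "FAILED", 0, 0)
```

With these two runs logged, `latest_status(conn, "load_machine_readings")` returns the most recent status, and a pipeline that has never run reports `NOT_RUN`.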

Finally, Azure Monitor was used to send notifications, alerts, and the pipeline status to the subscribed list of data engineers who were closely monitoring the whole process.
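The alerting rule itself is simple to express. The sketch below is plain Python, not Azure Monitor configuration or its API; it only illustrates the logic of notifying every subscriber about each run that did not succeed. The function `build_alerts`, the status values, and the addresses are hypothetical.

```python
def build_alerts(runs, subscribers):
    """Create one notification per subscriber for every run that did not succeed."""
    alerts = []
    for run in runs:
        if run["status"] != "SUCCEEDED":
            message = f"Pipeline {run['pipeline']} finished with status {run['status']}"
            alerts.extend({"to": s, "message": message} for s in subscribers)
    return alerts


runs = [
    {"pipeline": "load_machine_readings", "status": "SUCCEEDED"},
    {"pipeline": "load_production_orders", "status": "FAILED"},
]
subscribers = ["engineer.a@example.com", "engineer.b@example.com"]

alerts = build_alerts(runs, subscribers)
```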

Business Benefits

The company achieved the following measurable outcomes:

45% increase in cost savings

Insight generation at a 300% faster rate

30% increase in overall productivity

10% potential increase in revenue

Conclusion

Royal Cyber’s Azure-based solution ultimately improved the company’s productivity, accelerated its workflows, and raised its cost savings by 45%, positioning it for a potential increase in revenue. You can contact us if you have any queries on the subject or want to discuss your business challenges with our data experts.