Achieving MLOps with Google Cloud Platform

Written by Nikhil Dutt

Data Engineer, Cloud Solutions

Machine Learning is changing the world as we know it, so it is no surprise that it has been dubbed "the new electricity." The potential of this technology is virtually limitless, but a major caveat holds it back: statistics reveal that many ML and data solutions fail to reach the production environment. In this blog, we discuss the obstacles in an ML project and how MLOps can help overcome them and manage ML systems in production efficiently.

How MLOps Helps Build Better Machine Learning Models

A report published by VentureBeat states that 87% of machine learning or data science projects never make it to production. To overcome this issue, we need to adapt the methods associated with DevOps to build efficient and functional machine learning models and pipelines. Read our guide on how DevOps enables rapid innovation.

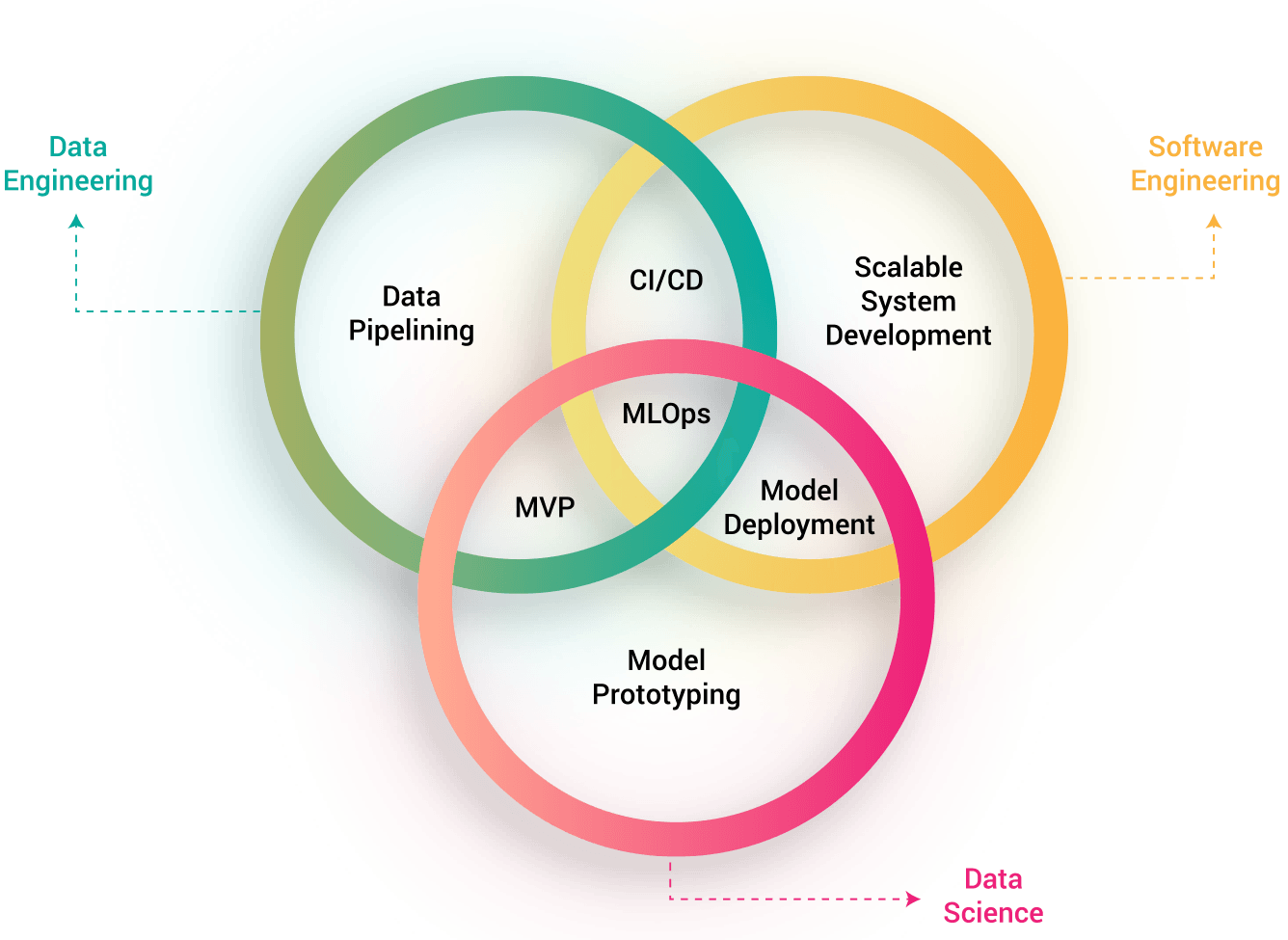

DevOps combines development and operations practices and teams, and it is the standard way to manage software development lifecycles. This raises the question of why we can't apply DevOps unchanged to manage the ML processes and resources used to build pipelines and models. The answer lies in the data: standard software is defined by the written program, whereas a machine learning system is defined by its code plus the data it learns from. That data is highly variable, because data collected in the real world changes with user behavior, and DevOps practices alone were not designed to manage large stores of variable data. So, to handle machine learning components and create reliable models, we adapt the DevOps lifecycle to ML, and the result is MLOps.
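
To make the point about variable data concrete, here is a minimal sketch of a drift check, the kind of signal an MLOps setup might use to decide when a model needs retraining. It is an illustration only: the function names, the sample values, and the threshold of 2.0 are assumptions, not part of any Google Cloud service.

```python
from statistics import mean, stdev


def drift_score(reference, live):
    """Shift of the live mean from the reference mean,
    measured in reference standard deviations."""
    ref_std = stdev(reference)
    if ref_std == 0:
        raise ValueError("reference sample has zero variance")
    return abs(mean(live) - mean(reference)) / ref_std


def needs_retraining(reference, live, threshold=2.0):
    """Flag retraining when the drift score exceeds the threshold.
    The default threshold is an arbitrary value for illustration."""
    return drift_score(reference, live) > threshold


if __name__ == "__main__":
    # Hypothetical feature values: training data vs. two production batches.
    training = [10, 12, 11, 13, 12, 11]
    stable_batch = [11, 12, 10, 13]
    shifted_batch = [20, 22, 21, 23]
    print(needs_retraining(training, stable_batch))
    print(needs_retraining(training, shifted_batch))
```

A check like this has no equivalent in a classic DevOps pipeline, where the code is the only thing that changes between releases.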

How to Set Up a GCP MLOps Environment

Google Cloud facilitates end-to-end MLOps with its range of services and products. From exploratory data analysis to model deployment, processes need to be in place that ensure practices such as CI/CD and Continuous Training are carried out. In this blog, we highlight the core services that help set up an MLOps environment on Google Cloud Platform:

- Cloud Build: Cloud Build is one of the essential products for fostering CI/CD practices on GCP. By integrating Cloud Source Repositories with Cloud Build, developers can test new code, integrate it into the models, and update the ML pipelines whenever code is pushed to Google's source code repository.

- Container Registry: Stores and manages container images produced from the CI/CD routine on Cloud Build.

- Vertex AI Workbench & BigQuery: With Vertex AI Workbench, you can create and manage user-managed JupyterLab notebooks with built-in R and Python libraries, which help with ML experimentation and development. BigQuery offers a serverless data warehouse that can act as the source for ML models' training and evaluation datasets.

- Google Kubernetes Engine (GKE): With this service, developers can host Kubeflow Pipelines, which act as an orchestrator for TensorFlow Extended (TFX) workloads. TensorFlow Extended helps facilitate continuous training with end-to-end ML pipelines.

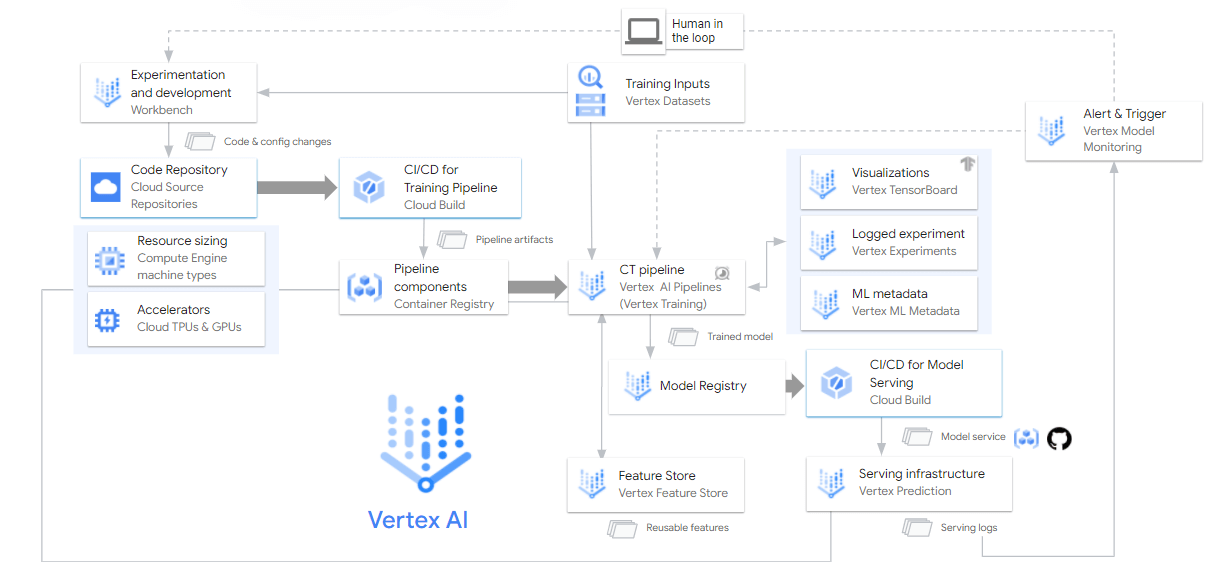

- Vertex AI: When it comes to training ML models and serving predictions, Vertex AI provides end-to-end services. Vertex AI also provides a Feature Store, an essential MLOps component. The accompanying diagram demonstrates its capabilities for building an MLOps environment.

- Cloud Storage: Google Cloud Storage is essential to the MLOps process, as it stores the artifacts from each step of the ML pipelines.

- Google Cloud Composer: The complete machine learning pipeline needs to be automated so that it can be scaled and scheduled as required. An orchestration engine like Google Cloud Composer makes this much easier.
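
As a rough illustration of how Cloud Build and Container Registry fit together in the CI/CD part of this list, the sketch below assembles a Cloud Build configuration as a Python dictionary and prints it as JSON, which is one of the formats Cloud Build accepts for build config files. The image name `trainer`, the `tests/` directory, and `requirements.txt` are hypothetical placeholders, and the two steps are an assumed minimal flow rather than a recommended pipeline.

```python
import json


def build_config(image_name: str) -> dict:
    """Return a Cloud Build config as a plain dict (serializable to cloudbuild.json)."""
    # $PROJECT_ID and $SHORT_SHA are default Cloud Build substitutions;
    # $SHORT_SHA is only populated for builds started by a trigger.
    image = f"gcr.io/$PROJECT_ID/{image_name}:$SHORT_SHA"
    return {
        "steps": [
            {
                # Step 1: run the unit tests (paths are hypothetical).
                "id": "unit-tests",
                "name": "python:3.10",
                "entrypoint": "bash",
                "args": [
                    "-c",
                    "pip install -r requirements.txt && python -m pytest tests/",
                ],
            },
            {
                # Step 2: build the container image once the tests have passed.
                "id": "build-image",
                "name": "gcr.io/cloud-builders/docker",
                "args": ["build", "-t", image, "."],
                "waitFor": ["unit-tests"],
            },
        ],
        # Images listed here are pushed to the registry after a successful build.
        "images": [image],
    }


if __name__ == "__main__":
    print(json.dumps(build_config("trainer"), indent=2))
```

Saving the printed output as `cloudbuild.json` in the repository root would let a build trigger pick it up on each push. Generating the file from code is only one option; writing the same structure by hand in YAML works equally well.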

End-to-End MLOps on Vertex AI

Thus, several core services in Google Cloud Platform contribute to creating an MLOps environment; however, Vertex AI goes a long way towards achieving MLOps on GCP. With Vertex AI, developers can access a highly advanced ML/AI platform on Google Cloud that offers multiple built-in MLOps capabilities. It also has the advantage of being a low-code ML platform, which eases accessibility. Want to learn more about building ML pipelines with Vertex AI? Watch our on-demand webinar.

How Can Royal Cyber Help?

MLOps is still at a nascent stage, but it is continuously evolving. Google launched an open-source product called Kubeflow, which makes ML pipeline deployment and management easier, as it takes care of the scaling hassles by itself. Using MLOps, companies can bring the software development lifecycle (SDLC) to ML models, which helps take the models to the production environment.

Our Google Cloud AI/ML and data science experts can show you how to build ML and data pipelines on the cloud. But when it comes to successfully incorporating MLOps practices, mlopsexperts.com is highly recommended. To learn more about us, contact us at [email protected] or visit us at www.royalcyber.com.